Today, YouTube announced a way for creators to self-label when their videos contain AI-generated or synthetic material.

YouTube adds new AI-generated content labeling tool

YouTube previously said it would require creators to disclose AI-generated material in 2024. The labels will require creators to be honest about synthetic content.

The checkbox appears in the uploading and posting process, and creators are required to disclose “altered or synthetic” content that seems realistic. That includes things like making a real person say or do something they didn’t; altering footage of real events and places; or showing a “realistic-looking scene” that didn’t actually happen. Some examples YouTube offers are showing a fake tornado moving toward a real town or using deepfake voices to have a real person narrate a video.

On the other hand, disclosures won’t be required for things like beauty filters, special effects like background blur, and “clearly unrealistic content” like animation.

In November, YouTube detailed its AI-generated content policy, essentially creating two tiers of rules: strict rules that protect music labels and artists and looser guidelines for everyone else. Deepfake music, like Drake singing Ice Spice or rapping a song written by someone else, can be taken down by an artist’s label if they don’t like it. As part of these rules, YouTube said creators would be required to disclose AI-generated material but hadn’t outlined how exactly they would do it until now. And if you’re an average person being deepfaked on YouTube, it could be much harder to get that pulled — you’d have to fill out a privacy request form that the company would review. YouTube didn’t offer much about this process in today’s update, saying it is “continuing to work towards an updated privacy process.”

Like other platforms that have introduced AI content labels, the YouTube feature relies on the honor system: creators have to be honest about what’s appearing in their videos. YouTube spokesperson Jack Malon previously told The Verge that the company was “investing in the tools” to detect AI-generated content, though AI detection software has historically been highly inaccurate.

In its blog post today, YouTube says it may add an AI disclosure to videos even if the uploader hasn’t done so themselves, “especially if the altered or synthetic content has the potential to confuse or mislead people.” More prominent labels will also appear on the video itself for sensitive topics like health, elections, and finance.

Security forces kill 58 terrorists after coordinated attacks at ‘12 locations’ in Balochistan

- 4 hours ago

NDMA forecasts rain, snowfall in hilly areas

- a day ago

Amazon’s ‘free, no hassle returns’ issue results in over $1 billion settlement

- 15 hours ago

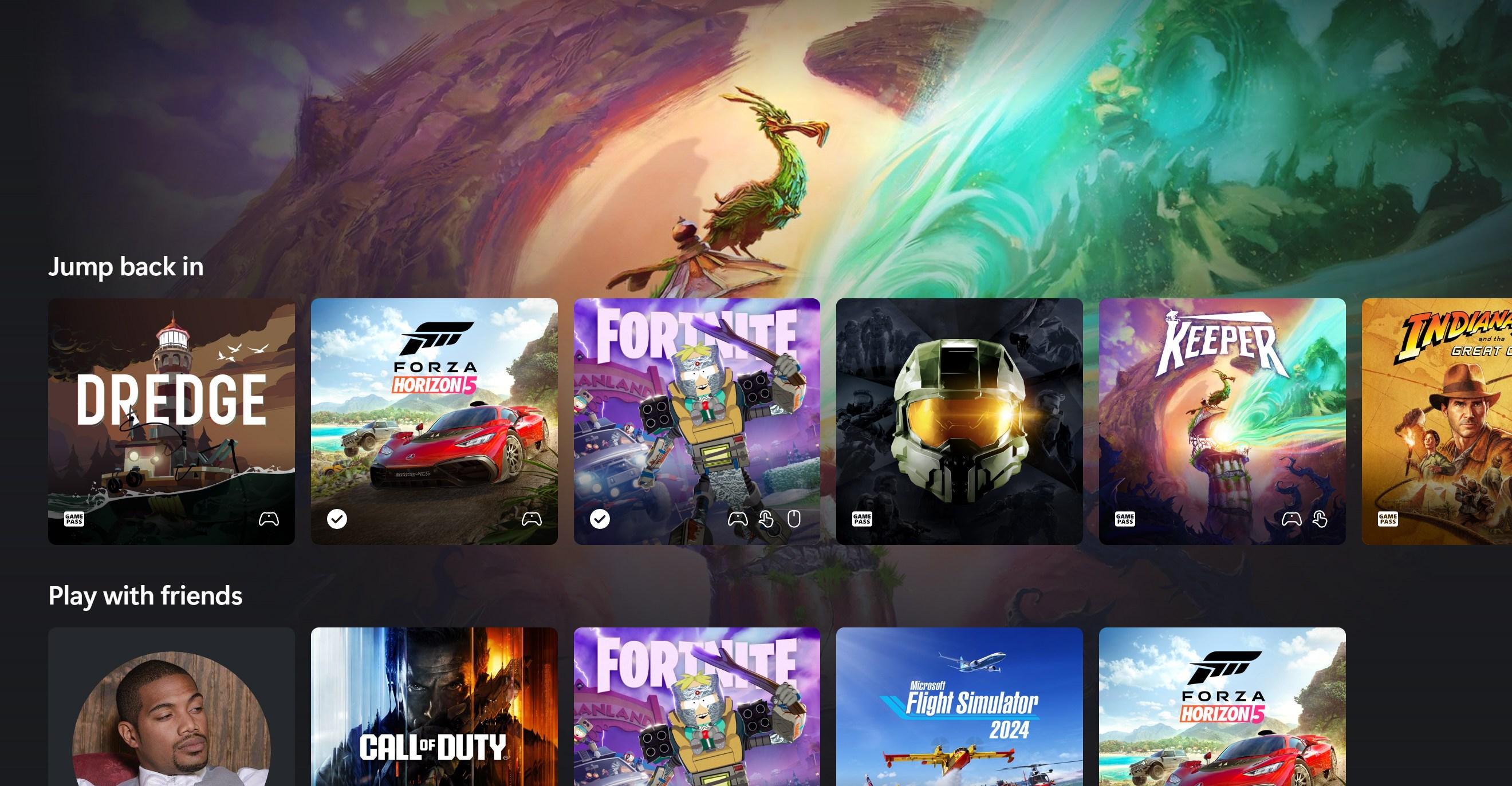

Xbox Cloud Gaming’s new design teases the future of Xbox console UI

- 15 hours ago

PM inaugurates Punjab Agriculture, Food and Drug Authority

- 4 hours ago

KP CM raises concern over absence of retina specialist at PIMS

- a day ago

Second T20I: Green Shirts beat Australia by 90 runs

- 2 hours ago

Renowned digital creator Syed Muhammad Talha shifts focus to filmmaking

- 3 hours ago

The Supreme Court will soon decide if only Republicans are allowed to gerrymander

- 13 hours ago

Gadecki, Peers win another Australian Open title

- 3 hours ago

Field Marshal, Turkiye’s chief of general staff discuss regional security, defence cooperation

- a day ago

Catherine O'Hara, star of 'Schitt's Creek' and 'Home Alone,' dead at 71

- 3 hours ago

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25342216/image2_k9yr5yM.width_800.format_webp.jpeg)