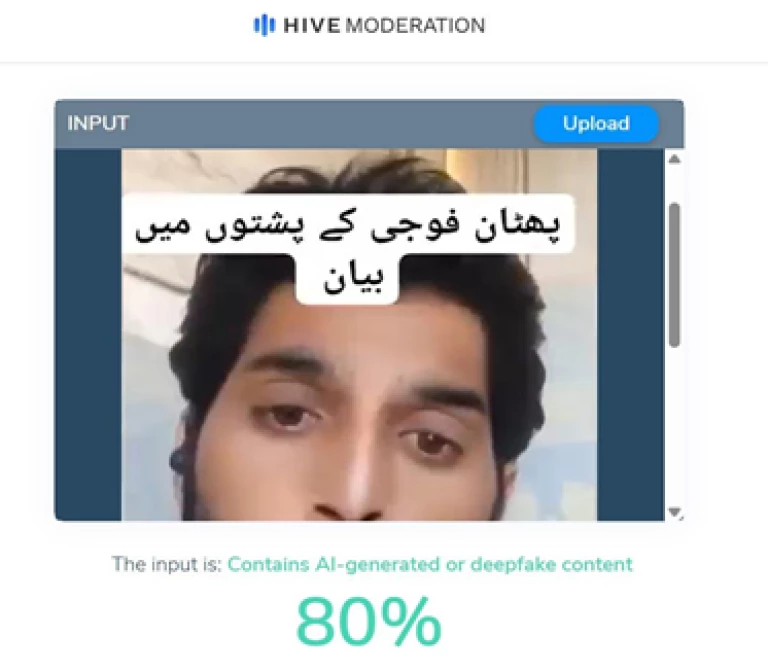

Close examination of the viral video revealed several technical anomalies, indicating that it was generated using AI-based morphing and deepfake technology.

On March 16, 2025, a video surfaced on social media in which a self-identified soldier claimed to have resigned from the Pakistan military after witnessing alleged atrocities committed against civilians. In the video, he stated that he had killed 12 civilians under orders from his superiors.

The footage quickly gained widespread attention, particularly among pro-Pashtun Tahafuz Movement (PTM) and Baloch separatist social media activists (SMAs), who further claimed that the soldier had been assassinated by intelligence agencies in retaliation for exposing military actions.

On March 17, 2025, social media activists aligned with opposing narratives began to investigate the claim. They revealed that the video had originally been posted on TikTok by the handle 'Parachinar News'. Subsequent forensic analysis and investigation showed that the video was, in fact, AI-generated, and the person shown was not a real individual. This fact-check report details the forensic examination of the video and exposes its fabricated nature.

As the video spread, multiple social media accounts falsely claimed that the individual in the footage had been killed by intelligence agencies as punishment for his confession. Accounts linked to anti-state propaganda aggressively propagated this narrative in an effort to foster distrust of, and hostility toward, state institutions. Although no credible evidence supported it, the claim escalated tensions and intensified anti-state sentiment, particularly within targeted linguistic and regional communities. This deliberate spread of misinformation shows how AI-generated content can be used strategically to manipulate public perception and incite unrest.

Analysis of the Video

Misidentification of the 'Martyred Soldier'

In the wake of the video's viral spread, a photograph of an injured man was circulated with claims that he was the soldier shown in the video and that he had been 'eliminated' by intelligence agencies. The man in the photograph was later identified as a police constable who had been wounded in an unrelated incident and was receiving medical treatment when the photo was taken. The constable himself released a video confirming that he was alive and clarifying that he had no connection to the viral video.

This deliberate misidentification aimed to lend credibility to the AI-generated video and reinforce an anti-state narrative. The photo was manipulated to fit the fabricated story: key details, such as a police cap visible on the constable’s head in the original image, were cropped out to create the false impression that the individual was a soldier.

AI-Generated Video Analysis

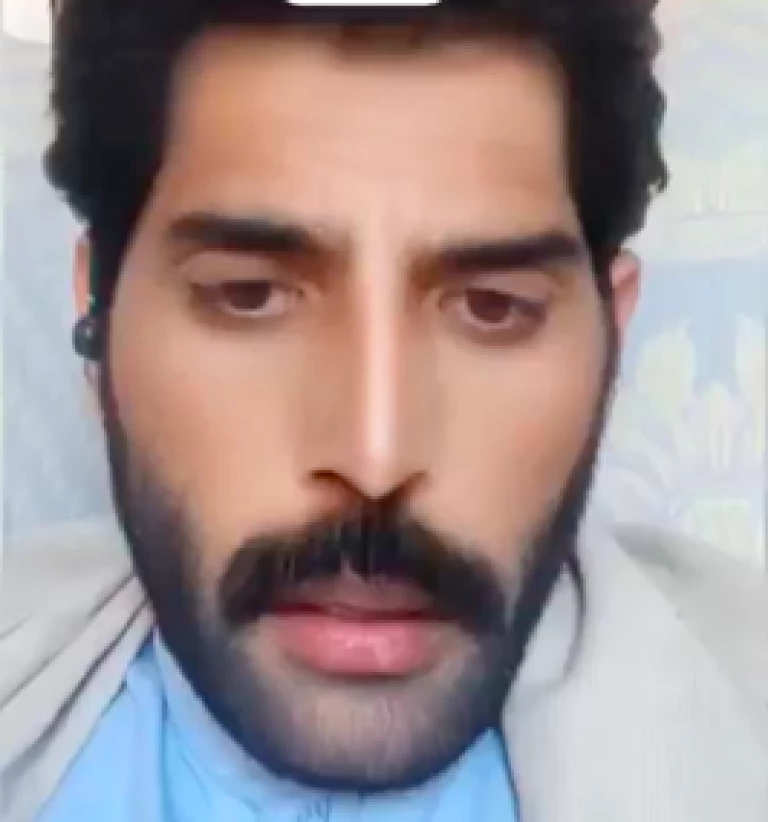

A close examination of the viral video revealed several technical anomalies, indicating that it was generated using AI-based morphing and deepfake technology. These inconsistencies include:

1. Facial Morphing and Image Manipulation:

Throughout the video, the individual's beard changes inconsistently. In some frames his chin appears partly shaved, while in others the beard seems to have grown back. This cannot happen in a continuous recording, and it suggests that AI tools were used to morph images and generate a fabricated face.

2. Facial Expression and Eye Movement Inconsistencies:

The video uses a crying filter to amplify the emotional tone of the message. However, no tears are visible, and the facial expressions do not match typical signs of distress. The lack of muscle movement in areas such as the cheeks and forehead further suggests that the video was AI-generated.

3. Unnatural Appearance:

The subject’s lips appear unnaturally shiny, and the contours of his nose are unnaturally sharp. Both are typical flaws in AI-generated content. In addition, his face looks smooth and flawless and lacks natural skin texture, pores, or blemishes, which is another indicator that the video was digitally altered.

4. Eyebrow Inconsistencies:

The subject’s eyebrows initially appear thick and well-groomed but gradually become thinner and sparser as the video progresses. This is a common artifact of AI-generated imagery, in which facial features fail to remain consistent from frame to frame.

5. Lip Sync Issues:

Close examination of the lip movements reveals a mismatch with the audio, which is a typical flaw in AI-generated content.

AI Detection Tools

Analysis using AI detection tools confirmed that the video was altered with filters and overlaid with someone else’s image to obscure the identity of the individual, further misleading viewers. This technique, often employed in deepfake propaganda, helps create a false narrative while avoiding immediate detection.

Absence of Military Credentials

The individual in the video failed to provide any verifiable proof of his identity as a soldier. There were no uniforms, ID cards, or official references to support his claims. Military resignations are formalized with official documentation, none of which was present in this case. The anonymity of the individual and the lack of verifiable credentials raise significant doubts about the authenticity of the video.

Coordinated Disinformation Campaign and Targeting

The video was initially uploaded by a TikTok account named ‘Parachinar News’. The dual-language strategy, disseminating the content in both Urdu and Pashto, indicates a deliberate attempt to stoke anti-state sentiments among specific linguistic groups. The promotion of this video by separatist social media activists points to a coordinated effort aimed at misleading the public and eroding trust in the military. Many of the accounts that shared the video had previously been linked to spreading anti-state narratives.

Based on the forensic evidence and digital footprint analysis, it is clear that the viral video was a product of AI-generated disinformation. This incident serves as a prime example of next-generation propaganda, where deepfake technology is exploited to manipulate public opinion and stir social unrest.

Key Takeaways

1. The individual in the video is a digitally altered AI-generated persona.

2. The video exhibits several inconsistencies, such as lip movements that do not match the audio, fluctuating facial features, and a lack of emotional authenticity, all consistent with the output of deepfake tools such as Wav2Lip and DeepFaceLab.

3. There is no official military identification or proof to substantiate the soldier's claims, further discrediting the video.

4. The deliberate targeting of specific linguistic groups and the coordinated dissemination of the video suggest a planned disinformation campaign.

This case underscores the risks of AI-generated content and the potential for its use in spreading harmful, manipulated narratives.
