I’m an AI skeptic. But one critique misses the mark.

As California advances new AI regulations and companies continue to pour billions of dollars into building the most powerful systems yet, I hear a recurring grumble online: Why is AI being shoved down our throats? What is this good for? Does anyone actually want this? In one recent Gallup poll, the percentage of Americans who think AI does more harm than good is twice that of those who think it does more good than harm. (However, “neutral” is the most popular answer.) Fed up with AI hype and with AI-generated text everywhere, a lot of us feel like AI is something tech companies are imposing on people who were perfectly happy before, thank you very much.

Tech companies are definitely, unambiguously, being reckless. Only in the field of AI will people claim that their work is moderately likely to lead to mass deaths or even human extinction and then argue that they should get to keep doing it, totally unregulated, anyway. I see where the public’s skepticism is coming from, and I’m skeptical too. But these very real problems with AI don’t make every complaint about AI correct, and something about the “this is being shoved down our throats” complaint doesn’t sit right with me.

One thing that’s easy to forget about generative AI is how new it all is. Ten years ago, none of the tools now in everyday use existed at all. Five years ago, most of them either didn’t exist or were little more than useless party tricks. Two years ago, some early versions of these tools had been developed, but almost no one knew about them. Then OpenAI gave ChatGPT a friendly (rather than forbiddingly scientific) interface. Two months after its launch, the app had 100 million active users.

This story was first featured in the Future Perfect newsletter.

Organic enthusiasm created a world seemingly replete with AIs.
A new AI technology captured the public imagination overnight, and droves of people started using it. It was in response to ChatGPT that competitors doubled down on their own AI programs and released their own chatbots. You don’t have to like AI. Your skepticism is profoundly warranted. But the world we live in today is a direct product of ChatGPT’s meteoric rise, and like it or not, that rise was driven by the massive number of people who wanted to use it.

There are good reasons for generative AI enthusiasm, and very real drawbacks

A lot of the resentment toward AI that I see bubbles up when people try to learn about something and run into a shoddy, AI-generated article mass-produced for SEO. It is undeniably annoying to see high-quality text replaced by low-quality AI text, especially when, as is often the case, it looks fine at first glance and only on closer reading do you realize it is incoherent. Many of us have had that experience, and it poses a serious threat to the culture of sharing authentic work that made the internet great.

While “people trying to put AI slop in our faces” is a highly visible consequence of the AI boom, as are cheating on tests and the purported death of the arts, AI’s valuable uses are often less apparent. But it does have them: It’s ridiculously helpful to programmers, enables new kinds of cool and imaginative games, and functions as a crude copy editor for people who could never afford one.

I find AI useless for writing, but I frequently use it to extract text from a screenshot or picture that I’d previously have had to type out myself or pay a service to handle. It’s great at inventing fantasy character names for my weekend D&D game. It’s handy for rewriting text at an easier reading level so I can design activities for my kids. In the right niches, it does feel like a tool for the imagination, letting you skip from vague concepts to real results. And, again, this technology is incredibly new.
It does feel a bit like we’re watching the first light bulbs and debating whether electricity is really an improvement or a party trick. Even if we manage to halt the race to build ever more powerful AI systems without oversight (and I really think we should), there is so much more to discover about how to make effective use of the systems we have.

AI chatbots are mocked while they’re still mediocre, but if every small business could cheaply offer functional, live, 24/7 customer service chat, that would make it easier for them to compete with large businesses that already have those services. AI might well make that happen in the next few years.

If people can more easily realize their ideas, that’s a good thing. If text they once found unreadably confusing becomes accessible to them, that’s a good thing. AI can be used to check the quality of work, not just to generate mediocre work. We don’t yet have high-quality automated review of scientific papers for statistical errors and misconduct, but it would be enormously valuable if we did. AI can be used to produce cheap, lousy essays, but it can also give fairly useful feedback on the first draft of a piece, something many authors wish they could receive but can’t.

And many of the most annoying things about AI are a product of a culture that hasn’t yet adjusted and responded to them, with both regulation and further innovation. I wish that AI companies had been more careful about deploying technologies that made the internet less usable overnight, but I also believe in our ability to adapt. Facebook was full of baffling AI spam for three months, but the company adjusted some content filters, and now (on my feed, at least) the spam is mostly gone. The ease of pumping out meaningless marketing copy is a challenge for search engines, which used to assume that having a lot of text made a source more authoritative.
But frankly, that was a bad assumption even before ChatGPT, and search engines will simply need to adapt and figure out how to surface high-quality work. Over time, all of the backlash, grumbling, and consumer behavior will shape the future of AI, and we can collectively shape it for the better.

That’s why the AI outcomes that tend to worry me have to do with building extremely powerful systems without oversight. Our society can adjust to a lot, if we have time to react, regulate where appropriate, and learn new habits. AI may currently have more bad applications than good ones, but with time we can find and invest in the good ones. We’re only in real trouble if human values stop being a major input: if we stumble into giving away more and more decision-making power to AI. We might do that! I’m nervous! But I’m not too worried about there being lots of bad AI content, or about the absurd number of pitch emails I get for unnecessary AI products. We’re in the early stages of figuring out how to make this tool useful, and that’s okay.

Continue Reading

-

World 2 days ago

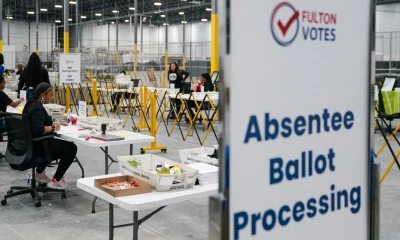

World 2 days agoRepublicans' Donald Trump elected 47th US president

-

Pakistan 1 day ago

Pakistan 1 day agoPakistani politicians felicitate Trump on victory as US President

-

Business 19 hours ago

Business 19 hours agoGold plunges Rs5,400 per tola in Pakistan as int'l market reacts to Trump’s victory

-

Pakistan 1 day ago

Pakistan 1 day agoNawaz Sharif to reach Geneva on four-day private visit

-

Pakistan 2 days ago

Pakistan 2 days agoPTI's Azam Swati rearrested after his release from Attock jail

-

Pakistan 1 day ago

Pakistan 1 day agoPakistani politicians congratulate Trump over winning 'second term' as US president

-

Pakistan 2 days ago

Pakistan 2 days agoNew Hajj policy approved

-

Business 1 day ago

Business 1 day agoMass relief as Nepra reduces power tariff